Demystifying Principal Component Analysis: Handling the Curse of Dimensionality

Generally, in machine learning problems, we often encounter too many variables and features in a given dataset on which the machine learning algorithm is trained.

The higher the number of features in a dataset, the harder it gets to visualize and to interpret the results of model training and the model’s performance. Moreover, when dealing with such a massive dataset in terms of features, the computational costs should be considered.

It would be great if there was a method to eliminate or exclude some features or dimensions to obtain a smaller feature space.

This is where dimensionality reduction techniques come into play. As we will see in this article what is this concept, and the details of a powerful algorithm called Principal Component Analysis that reduce the dimensionality of the dataset.

That way, you get a better understanding of these types of problems and how you can solve them.

Dimensionality Reduction

Dimensionality reduction is a set of machine learning techniques for reducing the number of features by obtaining a set of principal variables. This process can be carried out using several methods that simplify the modeling of complex problems, eliminate redundancy, and reduce the possibility of overfitting.

Some many techniques and algorithms can reduce the dimensionality of data, such as feature selection processes, which applies both statistical measures and search methods to obtain a smaller feature space.

There are also linear methods ( — like PCA), principal curves, kernel methods, local dimensionality reduction, nonlinear auto-associators, vector quantization methods, and multidimensional scaling methods.

We also deep learning solutions that are classified as unsupervised learning — this subset has its neural network architecture that we call autoencoders.

Today, we’re going to talk about one of the foundational algorithms in dimensionality reduction — principal component analysis.

Advantages of Dimensionality Reduction

Whenever there are massive datasets, dimensionality reduction is what comes to mind for machine learning engineers and AI developers. It helps them perform data visualization and complex data analysis processes. Here are some other advantages of using these techniques:

- Reduces both space and time complexity required for machine learning algorithms.

- Improves the interpretation of machine learning model parameters.

- It makes it easier to visualize data when reduced to very low dimensions, such as 2D or 3D.

- Avoids the curse of dimensionality.

Example

Say we’re doing a simple classification problem that relies on both humidity and rainfall. In such a case, these features may overlap. But oftentimes these highly-correlated features can be collapsed into just one feature, which helps shrink the overall feature space.

Principal Component Analysis

Principal component analysis (PCA) is an algorithm that uses a statistical procedure to convert a set of observations of possibly correlated variables into a set of values of linearly uncorrelated variables called principal components.

This is one of the primary methods for performing dimensionality reduction — this reduction ensures that the new dimension maintains the original variance in the data as best it can.

That way we can visualize high-dimensional data in 2D or 3D, or use it in a machine learning algorithm for faster training and inference.

PCA steps

PCA works with the following steps:

- Standardize (or normalize) the data: By default, this is the machine learning engineer’s responsibility.

- Calculate the covariance matrix from this standardized data (with dimension d).

- Obtain the Eigenvectors and Eigenvalues from the newly-calculated covariance matrix.

- Sort the Eigenvalues in descending order, and choose the 𝑘 Eigenvectors that correspond to the 𝑘 largest Eigenvalues — where 𝑘 is the number of dimensions in the new feature subspace (𝑘≤𝑑).

- Construct the projection matrix 𝑊 from the 𝑘 selected Eigenvectors.

- Transform the original dataset 𝑋 by simple multiplication in 𝑊 to obtain a 𝑘-dimensional feature subspace 𝑌.

Next, we dive deeper into the details of each step to more fully understand the concepts.

PCA in-depth

Here, we’ll be refreshing some foundational Linear Algebra and Statistics concepts in this section, alongside the details of the previous steps. The math is important here because it’s the core of the principal component analysis algorithm.

Data Standardization

Standardizing (or normalizing) the data before applying PCA is critical — this is because PCA will give more importance to those features having higher variances than to those features with extremely low variances during the identification of the best principle components to retain.

Say that you have a dataset with features that have different units — for example, one feature is in kilometers and another is in centimeters, and both have the same change in value. PCA will understand that the KM feature is reflecting minor changes, while the other is reflecting bigger changes.

In this case, standardization is required to tackle these issues; otherwise, PCA will give a higher preference to the centimeter variable.

Covariance Matrix

Variance in statistics means the measure of the variability or spread in a set of data. Mathematically, it’s the average squared deviation from the mean score.

Covariance is a measure of the amount or the degree to which corresponding elements from two sets of ordered data move in the same direction.

A covariance matrix generalizes the notion of variance to multiple dimensions (or multiple features). It describes the covariance for each pair of features values (or Random Variables).

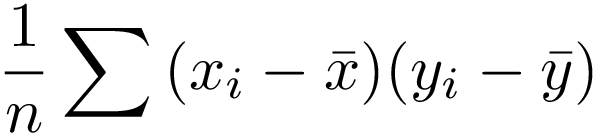

A covariance matrix can be calculated as follows:

What a covariance matrix does for PCA is simply describe the relationship between the variables so the algorithm knows how to reduce their dimensionality without losing a lot of information.

Computing Eigenvectors and Eigenvalues

The eigenvector of a matrix (linear transformation) is a nonzero vector that changes at most by a scalar factor when that linear transformation is applied to it, whereas the eigenvalue is the factor by which the Eigenvector is scaled.

These concepts are at the core of PCA. We’re going to calculate those elements for our covariance matrix from the previous step. The Eigenvectors (or principal components) determine the directions of the new feature space, and the Eigenvalues determine their magnitude.

In other words, the Eigenvalues explain the variance of the data along the new feature axes.

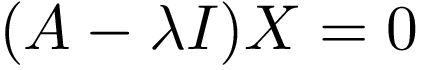

The Eigenvalue and Eigenvector of a matrix can be calculated as follows:

— Where 𝜆 is the Eigenvalue and 𝑋 is the Eigenvector.

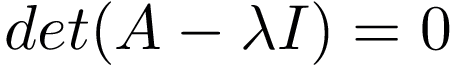

To solve the previous equation, we need a zero determinant:

With that, we find the required Eigenvalues and Eigenvectors.

Sort Eigenvalues

The typical goal of PCA is to reduce the dimensionality of the original features space by projecting it onto a smaller subspace — in other words, where the Eigenvectors will form the axes.

To decide which Eigenvectors can be dropped without losing too much information for the construction of lower-dimensional subspace, we need to inspect their corresponding Eigenvalues:

- The Eigenvectors with the lowest Eigenvalues carry the least information about the distribution of the data — those are the ones that can be dropped.

- The Eigenvectors with the high Eigenvalues carry more information about the distribution of the data — those are the ones that need to be preserved.

To do so, a common approach is to rank or sort the Eigenvalues from highest to lowest, and then choose the top k Eigenvectors.

Projection matrix

This is the easiest part: the construction of the projection matrix that will be used to transform the dataset into the new feature subspace.

The way to construct this matrix is to concatenate the top k Eigenvectors from the previous step.

Transform the original dataset

We’ve finally arrived at the last step. Here, we’ll use the projection matrix 𝑊 from the previous step to transform our samples into the new subspace.

We can achieve that using simple matrix multiplication, via the equation 𝑌=𝑋×𝑊, where 𝑌 is the matrix of our transformed samples, and X is our original matrix dataset.

Coding

Now that we’ve covered the steps and the math behind PCA, we’ll now look at the two ways to implement PCA — first by coding it ourselves, and second through the use of an external library.

Hard coding

To implement the algorithm ourselves, we need the NumPy functions. Then, using the aforementioned steps, we get the principal component analysis.

Here’s an implementation:

import numpy as np

def pca(number_compontents, data):

if not 0 <= number_compontents <= data.shape[1]:

raise ValueError('The number of features are less than the number of components')

# calculate the covariance matrix

cov_matrix = np.cov(data.T)

# calculate the eigen things

eig_vals , eig_vecs = np.linalg.eigh(cov_matrix)

# making eigen values and eigen vectors as pairs

eig_pairs = [(np.abs(eig_vals[i]) , eig_vecs[:,i]) for i in range(len(eig_vals))]

# Sorting All of Them

eig_pairs.sort(key = lambda x : x[0] , reverse= True)

# Getting the selected vector in a form of matrix

final = [eig_pairs[i][1].reshape(data.shape[1],1) for i in range(number_compontents)]

# Creating the Projection Matrix, multiplying by -identity is an addons

projection_matrix = np.hstack((final))

# transforming the data

Y = data.dot(projection_matrix)

return Y

# Give the dataset and the number of components

new = pca(number_compontents = 2 , x_train)

plt.scatter(new[:,0], new[:,1], c = y_train,s = 100, alpha = 0.5)

plt.xlabel('Principal Component 1')

plt.ylabel('Principal Component 2')

plt.show()

Using an external library

Even if you can implement PCA yourself, you’ll probably end up like all other machine learning engineers and data scientists — using libraries to accomplish dimensionality reduction.

Here is a code sample that uses scikit-learn:

# Getting The PCA From sklearn library

from sklearn.decomposition import PCA

# create the object with your desired dimensions.

pca = PCA(n_components = 2)

# project the data in the new dimension space.

new = pca.fit_transform(x_train)

# Ploting The Data For Sklearn Result

plt.scatter(new[:,0], new[:,1], c = y_train,s = 100, alpha = 0.5)

plt.xlabel('Principal Component 1')

plt.ylabel('Principal Component 2')

plt.show()

Resources

There are more examples and lessons out there that will help you start using PCA and dimensionality reduction in general. Here are a few I particularly like:

- This answer describes PCA in an easy-to-understand non-technical way.

- Introduction to LDA, another dimensionality reduction method.

- PCA in sklearn: documentation.

- Another way to calculate principal components using the SVD algorithm.

Conclusion

In this blog post, we saw that the curse of dimensionality can constitute a serious obstacle to the efficiency of the machine learning models — Having too many overlapping data dimensions.

As a solution, we explored the PCA algorithm that helps you reduce the dimensionality of data so the machine learning algorithms can operate in a faster and more effective way.

I hope I succeeded in conveying the concept and the details of the PCA to you. Understanding how these algorithms and other machine learning algorithms work is pretty delightful because it helps you debug problems in your data — and eventually to build better models.